Low Density Parity Check Codes

5G networks use low-density parity-check (LDPC) codes for forward error correction on the shared channels that carry user data over the air interface. These channel codes:

- approach channel capacity at large block lengths,

- are robust against channel noise,

- allow scalable and efficient hardware implementations,

- have low power consumption and a small silicon footprint,

- can be extended to support diverse payload sizes and code rates,

- admit low-complexity decoders that are easy to design and implement,

- can deliver both high reliability and high data rates.

Important

LDPC codes approach channel capacity at large block lengths, which makes them suitable for high-data-rate applications.

Important

LDPC codes are used for forward error correction in the PDSCH and PUSCH, which carry user data in the downlink and uplink, respectively.

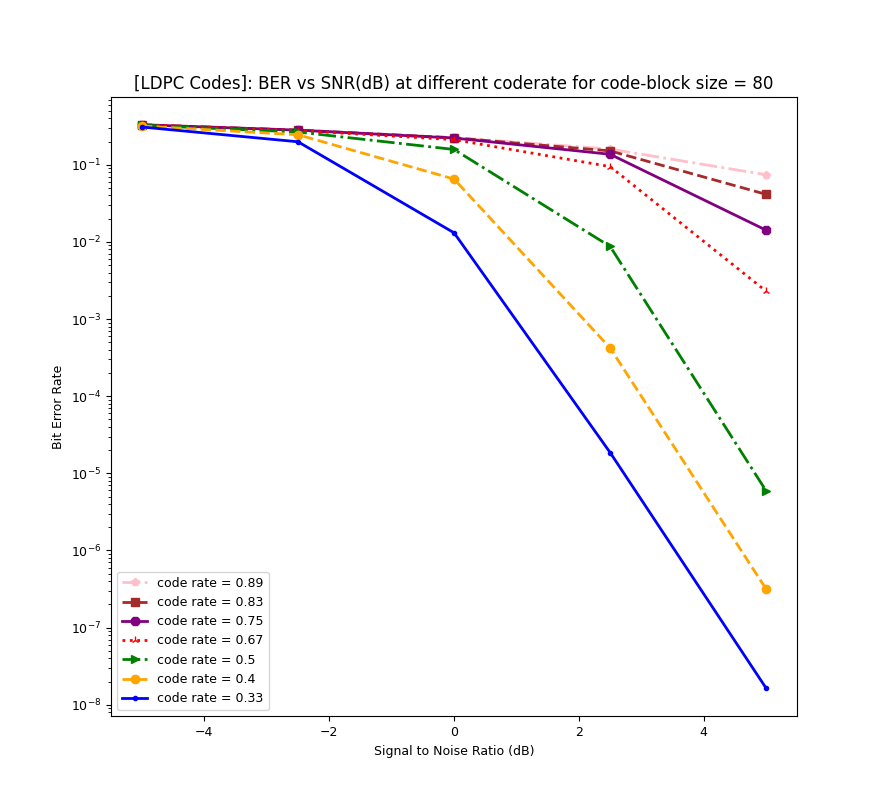

The reliability results of LDPC codes for a block size of 80 are shown in the accompanying figure. For a more comprehensive analysis of LDPC codes, please refer to Tutorial-4.

LDPC Encoder

The implementation details of the LDPC encoder are provided in Section 5.3.2 of [3GPPTS38212_LDPC]. The following example illustrates how to use the 5G-compliant LDPC encoder in simulations. Using the 5G LDPC encoder is slightly more involved than using a standard LDPC coder because it accepts only certain fixed input sizes. These sizes are determined by the lifting factor, which is in turn computed from the transport block size and the code rate. This restriction allows a flexible and scalable LDPC implementation suited to fast and efficient real-time use cases.

    import numpy as np
    from toolkit5G.ChannelCoder import LDPCEncoder5G
    # NOTE: the import path of LDPCparameters is assumed; adjust it to your toolkit5G version
    from toolkit5G.ChannelCoder.LDPC import LDPCparameters

    tbSize   = 32    # Transport block size
    codeRate = 0.5   # Code rate = k/n

    ldpcConfig = LDPCparameters(tbSize, codeRate)
    k      = ldpcConfig.k_ldpc         # Value is 80
    bg     = ldpcConfig.baseGraph      # Base-graph type, "BG1" or "BG2"
    zc     = ldpcConfig.liftingfactor  # Lifting factor
    numCBs = ldpcConfig.numCodeBlocks  # Number of code blocks

    numBatch = np.random.randint(1, 10)  # Number of batches

    # Block of bits to encode, shape [numBatch, numCBs, k]
    bits = np.float32(np.random.randint(2, size=(numBatch, numCBs, k), dtype=np.int8))

    encoder = LDPCEncoder5G(k, bg, zc)  # Create the LDPC encoder object
    encBits = encoder(bits)             # LDPC-encoded bits
    n       = encBits.shape[-1]         # Size of the codeword
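The value k = 80 in the example above follows from the base-graph selection, CRC attachment, and code-block segmentation rules of 3GPP TS 38.212 (Sections 7.2.1, 7.2.2, and 5.2.2). The following is a minimal sketch of those rules in plain Python. It assumes that `LDPCparameters` applies the standard procedure unchanged, which is not confirmed here; the function name `ldpc_sizes` and its return layout are hypothetical and not part of toolkit5G.

```python
import math

# Lifting sizes of TS 38.212 Table 5.3.2-1: Z = a * 2**j, limited to Z <= 384
LIFTING_SIZES = sorted(
    a * 2**j
    for a in (2, 3, 5, 7, 9, 11, 13, 15)
    for j in range(8)
    if a * 2**j <= 384
)


def ldpc_sizes(tb_size, code_rate):
    """Hypothetical helper: return (base graph, Zc, K per code block, number of code blocks)."""
    # Base-graph selection: BG2 for small payloads or low code rates
    if tb_size <= 292 or (tb_size <= 3824 and code_rate <= 0.67) or code_rate <= 0.25:
        bg, k_cb = "BG2", 3840
    else:
        bg, k_cb = "BG1", 8448

    # Transport-block CRC: 24 bits for payloads above 3824 bits, 16 bits otherwise
    b = tb_size + (24 if tb_size > 3824 else 16)

    # Code-block segmentation: every segment carries its own 24-bit CRC
    if b <= k_cb:
        num_cbs, b_total = 1, b
    else:
        num_cbs = math.ceil(b / (k_cb - 24))
        b_total = b + 24 * num_cbs
    # Bits per code block (exact division for valid transport block sizes)
    k_prime = math.ceil(b_total / num_cbs)

    # Number of information columns Kb of the base graph
    if bg == "BG1":
        kb = 22
    elif b > 640:
        kb = 10
    elif b > 560:
        kb = 9
    elif b > 192:
        kb = 8
    else:
        kb = 6

    # Smallest lifting size that fits one code block
    zc = min(z for z in LIFTING_SIZES if kb * z >= k_prime)

    # Encoder input size, including filler bits
    k = (22 if bg == "BG1" else 10) * zc
    return bg, zc, k, num_cbs


bg, zc, k, num_cbs = ldpc_sizes(32, 0.5)
print(bg, zc, k, num_cbs)
```

For `tbSize = 32`, the 16-bit CRC gives 48 bits in a single code block, BG2 is selected with Kb = 6, the smallest lifting size with 6·Zc ≥ 48 is Zc = 8, and the encoder input size is 10·Zc = 80.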

The details of the input-output interface of the LDPC encoder are provided below.

- class toolkit5G.ChannelCoder.LDPCEncoder5G(k, bg, zc, dtype=tf.float32, **kwargs)[source]

Implements the 3GPP standards-compliant LDPC encoder for 5G NR. The details of the encoder are provided in Section 5.3.2 of [3GPPTS38212_LDPC]. The implementation builds on Sionna’s LDPC encoder, fixes several standards-compliance issues, and makes it usable for uplink and downlink physical channel simulations.

- Parameters:

k (int) – Number of information bits (per codeword) to be encoded using LDPC.

bg (str) – Defines the base graph used for LDPC encoding. It can take values from the set {“BG1”, “BG2”}.

zc (int) – LDPC lifting factor. It takes either a scalar or a numpy integer. Please refer to Table 5.3.2-1 of [3GPPTS38212_LDPC] for valid values.

dtype (tf.DType) – Defaults to tf.float32. Defines the output datatype of the layer (internal precision remains tf.uint8).

- Input:

inputs (([…,k], tf.float32 or np.float32)) – 2+D tensor containing the information bits to be encoded.

- Output:

[…,n], np.float32 – 2+D tensor containing the encoded codeword bits. It has the same shape as inputs except that the last dimension is changed to n.

- Raises:

ValueError – “[Error-LDPC Encoder]: ‘k’ must be integer”

ValueError – “[Error-LDPC Encoder]: ‘zc’ must be integer”

ValueError – “[Error-LDPC Encoder]: ‘bg’ must be string values from {‘BG1’,’BG2’}”

ValueError – “Unsupported dtype.”

ValueError – “i_ls too large.”

ValueError – “i_ls cannot be negative.”

ValueError – “Basegraph not supported.”

- property bm

Base matrix used to construct the parity-check matrix of the LDPC code.

- computeIls(zc)[source]

Select lifting as defined in Sec. 5.2.2 in [3GPPTS38212_LDPC]. zc is the lifting factor. i_ls is the set index ranging from 0…7 (specifying the exact BG selection).

- property i_ls

Set Index corresponding to lifting factor used for constructing the base matrix.

- property k

Number of information bits to be encoded using LDPC encoder.

- property n

Number of LDPC codeword bits before rate matching.

- property n_ldpc

Number of LDPC codeword bits before pruning

- property pcm

Parity-check matrix for given code parameters.

- property z

Lifting factor of the basegraph.
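The mapping from a lifting factor to its set index, as described for `computeIls`, can be sketched as a table lookup. This is an illustration built from TS 38.212 Table 5.3.2-1, where set index i holds the sizes a·2^j for a in (2, 3, 5, 7, 9, 11, 13, 15) with Z ≤ 384. The name `lifting_set_index` is hypothetical, and the sketch is not the toolkit's implementation.

```python
# Map every valid lifting size Z = a * 2**j (Z <= 384) to its set index i_LS
_SET_INDEX = {
    a * 2**j: i_ls
    for i_ls, a in enumerate((2, 3, 5, 7, 9, 11, 13, 15))
    for j in range(8)
    if a * 2**j <= 384
}


def lifting_set_index(zc):
    """Hypothetical helper: return the set index i_LS (0..7) for lifting factor zc."""
    if zc not in _SET_INDEX:
        raise ValueError(f"{zc} is not a valid 5G NR lifting factor")
    return _SET_INDEX[zc]


# 8 = 2 * 2**2 (set 0), 80 = 5 * 2**4 (set 2), 384 = 3 * 2**7 (set 1)
print(lifting_set_index(8), lifting_set_index(80), lifting_set_index(384))
```

The set index selects which of the eight shift-coefficient columns of the base graph table is used when the parity-check matrix is lifted.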

LDPC Decoder

This class provides a belief-propagation-based, 3GPP standards-compliant LDPC decoder that inherits from the Sionna library. An example of how to use the LDPC decoder is given below.

    from toolkit5G.ChannelCoder import LDPCDecoder5G

    # rxCodeword: LLRs (or logits) of the codeword bits, with shape [..., n]
    decoder = LDPCDecoder5G(bg, zc)  # Create the LDPC decoder object
    decBits = decoder(rxCodeword)    # Decoded bits
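The decoder input `rxCodeword` has to be computed from the received samples. The following is a minimal NumPy sketch for BPSK over an AWGN channel. It assumes the logit convention log(P(b=1)/P(b=0)), which is the convention Sionna documents for its decoder inputs; whether toolkit5G uses the same sign convention should be checked before use. The codeword length, the noise variance, and the random stand-in for the encoded bits are illustrative assumptions.

```python
import numpy as np

rng = np.random.default_rng(seed=0)

n = 400            # Hypothetical codeword length (use the encoder's n in practice)
noise_var = 0.01   # Assumed noise variance per real dimension

# Stand-in for encoded bits; in a real chain these would be the encoder output
code_bits = rng.integers(0, 2, size=(4, n))

# BPSK mapping: bit 0 -> +1, bit 1 -> -1
tx = 1.0 - 2.0 * code_bits

# Real-valued AWGN channel
rx = tx + np.sqrt(noise_var) * rng.standard_normal(tx.shape)

# Logits log(P(b=1)/P(b=0)) = -2*y/sigma^2 for the mapping above
rxCodeword = np.float32(-2.0 * rx / noise_var)

# Sanity check: a positive logit means bit 1 is more likely
hard_bits = (rxCodeword > 0).astype(np.int64)
print("bit errors before decoding:", int(np.sum(hard_bits != code_bits)))
```

With the opposite convention, log(P(b=0)/P(b=1)), the sign of `rxCodeword` would simply be flipped.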

The input-output interface of the LDPC decoder is detailed below.

- class toolkit5G.ChannelCoder.LDPCDecoder5G(bg, z, trainable=False, cn_type='boxplus-phi', hard_out=True, track_exit=False, return_infobits=True, prune_pcm=True, num_iter=20, stateful=False, output_dtype=tf.float32, **kwargs)[source]

This module implements the iterative belief propagation decoder for LDPC codes, compliant with the 3GPP 5G NR standard. The implementation builds on Sionna’s LDPC decoder, fixes several standards-compliance issues, and makes it usable for uplink and downlink physical channel simulations. The details of the 5G LDPC codes are provided in [3GPPTS38212_LDPC]. It supports many features of the Sionna decoder, such as tractability and differentiability. The class inherits from the Keras layer class and can be used as a layer in a Keras model.

- Parameters:

bg (str) – Defines the base graph used for LDPC encoding. It can take values from the set {“BG1”, “BG2”}.

z (int) – LDPC lifting factor. It takes either a scalar or a numpy integer. Please refer to Table 5.3.2-1 of 3GPP TS 38.212 [3GPPTS38212_LDPC] for valid values.

trainable (bool) – Defaults to False. If True, every outgoing variable node message is scaled with a trainable scalar.

cn_type (str) – Defaults to “boxplus-phi”. One of {“boxplus”, “boxplus-phi”, “minsum”}: “boxplus” implements the single-parity-check APP decoding rule, “boxplus-phi” implements a numerically more stable version of boxplus [Ryan], and “minsum” implements the min-approximation of the CN update rule [Ryan].

hard_out (bool) – Defaults to True. If True, the decoder provides hard-decided codeword bits instead of soft-values.

track_exit (bool) – Defaults to False. If True, the decoder tracks EXIT characteristics. Note that this requires the all-zero CW as input.

return_infobits (bool) – Defaults to True. If True, only the k info bits (soft or hard-decided) are returned. Otherwise all n positions are returned.

prune_pcm (bool) – Defaults to True. If True, all punctured degree-1 VNs and connected check nodes are removed from the decoding graph (see [Cammerer] for details). Besides numerical differences, this should yield the same decoding result while improving the decoding throughput and reducing the memory footprint.

num_iter (int) – Defaults to 20. Defines the number of decoder iterations (no early stopping is used at the moment).

stateful (bool) – Defaults to False. If True, the internal VN messages msg_vn from the last decoding iteration are returned, and msg_vn or None needs to be given as a second input when calling the decoder. This is required for iterative demapping and decoding.

output_dtype (tf.DType) – Defaults to tf.float32. Defines the output datatype of the layer (internal precision remains tf.float32).

- Input:

llrs_ch or (llrs_ch, msg_vn) – Tensor or Tuple (only required if stateful is True):

llrs_ch ([…,n], tf.float32) – 2+D tensor containing the channel logits/llr values.

msg_vn (None or RaggedTensor, tf.float32 or np.float32) – Ragged tensor of VN messages. Required only if stateful is True.

- Output:

[…,n] or […,k], tf.float32 or np.float32 – 2+D tensor of same shape as inputs containing bit-wise soft-estimates (or hard-decided bit-values) of all codeword bits. If return_infobits is True, only the k information bits are returned.

RaggedTensor, tf.float32 – Tensor of VN messages. Returned only if stateful is set to True.

- Raises:

Assertion – “For stateful decoding, a tuple of two inputs is expected.”

Assertion – ‘Last dimension must be of length n.’

Assertion – ‘The inputs must have at least rank 2.’

Assertion – ‘output_dtype must be tf.DType.’

Assertion – ‘stateful must be bool.’

Assertion – “Internal error: number of pruned nodes must be positive.”

ValueError – “Basegraph not supported.”

ValueError – “i_ls cannot be negative.”

ValueError – “i_ls too large.”

ValueError – ‘output_dtype must be {float16, float32, float64}.’

Note

Sionna provides many more details in its LDPC Decoder documentation. Readers are welcome to explore it.

- property bm

Base matrix used to construct the parity-check matrix for the LDPC decoder.

- computeIls(zc)[source]

Select lifting as defined in Sec. 5.2.2 in [3GPPTS38212_LDPC]. zc is the lifting factor. \(i_{ls}\) is the set index ranging from \(0, \dots,7\) (specifying the exact BG selection).

- property i_ls

Set Index corresponding to lifting factor used for constructing the base matrix.

- property k_ldpc

Defines the number of LDPC decoded bits per codeword.

- property llr_max

Defines the maximum LLR value used for LDPC decoding to avoid saturation.

- property n

Size of the codeword passed to the decoder after the truncated bits are added back to the decoder input.

- property n_ldpc

Defines the size of codeword passed to decoder after rate de-matching.

- property nb_pruned_nodes

Defines the number of pruned nodes used for LDPC encoding/decoding.

- property prune_pcm

Defines the pruned parity-check matrix (PCM) for the LDPC decoder.
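The three check-node (CN) update rules selectable through `cn_type` can be compared numerically on a single check node. The following is a minimal NumPy sketch of the textbook formulas, not the toolkit's or Sionna's implementation; the function names are hypothetical. Each function takes the LLR-domain messages arriving on all other edges of a check node and returns the outgoing message on the remaining edge.

```python
import numpy as np


def cn_boxplus(llrs):
    """Exact rule: L = 2 * atanh( prod_i tanh(l_i / 2) )."""
    return 2.0 * np.arctanh(np.prod(np.tanh(llrs / 2.0)))


def phi(x):
    """phi(x) = -log(tanh(x / 2)); it is its own inverse for x > 0."""
    return -np.log(np.tanh(x / 2.0))


def cn_boxplus_phi(llrs):
    """Same rule with signs and magnitudes separated; the product becomes a sum."""
    sign = np.prod(np.sign(llrs))
    return sign * phi(np.sum(phi(np.abs(llrs))))


def cn_minsum(llrs):
    """Min-sum approximation: product of signs times the smallest magnitude."""
    return np.prod(np.sign(llrs)) * np.min(np.abs(llrs))


incoming = np.array([1.2, -0.8, 2.5])  # Illustrative incoming messages
print("boxplus    :", cn_boxplus(incoming))
print("boxplus-phi:", cn_boxplus_phi(incoming))
print("minsum     :", cn_minsum(incoming))
```

The two boxplus variants agree up to rounding. Min-sum keeps the sign but never yields a smaller magnitude than the exact rule, which is why practical min-sum decoders often scale or offset the result.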

LDPC Codec Subcomponents

The LDPC codec uses the following submodules for pre-processing and post-processing, which perform error detection, code-block segmentation, rate matching, and code-block concatenation. The details of these procedures are provided below.

- References:

[3GPPTS38212_LDPC] 3GPP TS 38.212, “Multiplexing and channel coding (Release 17)”, V17.1.0 (2022-03).

[Ryan] W. Ryan, “An Introduction to LDPC codes”, CRC Handbook for Coding and Signal Processing for Recording Systems, 2004.

[Cammerer] S. Cammerer, M. Ebada, A. Elkelesh, and S. ten Brink, “Sparse graphs for belief propagation decoding of polar codes”, IEEE International Symposium on Information Theory (ISIT), 2018.